What Is the "Chain of Thought" in Agent Models?

Recently, you might have heard several fancy terms floating around: Interleaved Thinking (Claude), Thinking-in-Tools (MiniMax K2), Thinking in Tool-Use (DeepSeek V3.2), and Thought Signature (Gemini). At first glance they sound complex, but they all describe the same core idea: how a model’s internal reasoning is preserved and passed along within an Agent’s long-context execution loop.

The Basics: Chain of Thought in Chat vs. Agent Modes

By early 2025, “thinking models” like DeepSeek-R1 and OpenAI o1 had already popularized the concept of chain-of-thought (CoT) reasoning:

In standard chatbots, the model first generates its internal reasoning (the “thinking”), then produces the final response.

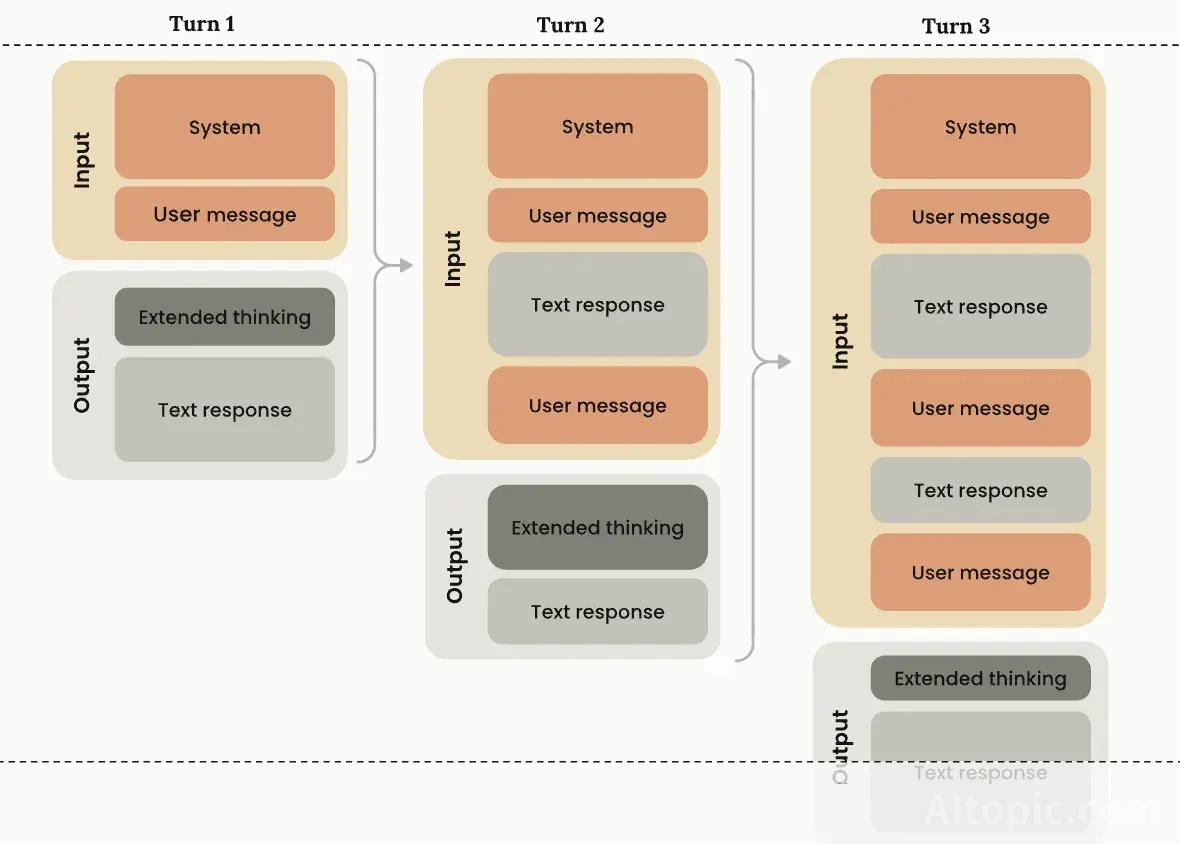

However, in multi-turn conversations, only the user input and the model’s final answer are retained in the context; the intermediate thinking steps are discarded after each turn.

Why? Because traditional chat is designed for single-turn problem solving. Keeping past reasoning would bloat the context, increase token usage, and potentially confuse the model with irrelevant history.
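To make the chat-mode behavior concrete, here is a minimal sketch of how a chat client might rebuild its context before each new turn. The Turn class and build_chat_context function are hypothetical names for illustration, and the role/content dicts follow the common chat-message convention rather than any one vendor’s API.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    user: str      # what the user asked
    thinking: str  # internal reasoning produced during this turn
    answer: str    # final reply shown to the user


def build_chat_context(history, new_user_msg):
    """Rebuild the prompt for the next chat turn. Past thinking is dropped."""
    messages = []
    for turn in history:
        messages.append({"role": "user", "content": turn.user})
        # Only the final answer survives; turn.thinking is deliberately left out.
        messages.append({"role": "assistant", "content": turn.answer})
    messages.append({"role": "user", "content": new_user_msg})
    return messages


history = [Turn(user="What is 17 * 3?",
                thinking="17 * 3 = 51; check: 10 * 3 + 7 * 3 = 30 + 21 = 51.",
                answer="51")]
context = build_chat_context(history, "And doubled?")
```

The resulting context holds three messages: the first question, the bare answer "51", and the follow-up. The arithmetic check never reaches the next turn.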

The Problem with Agents

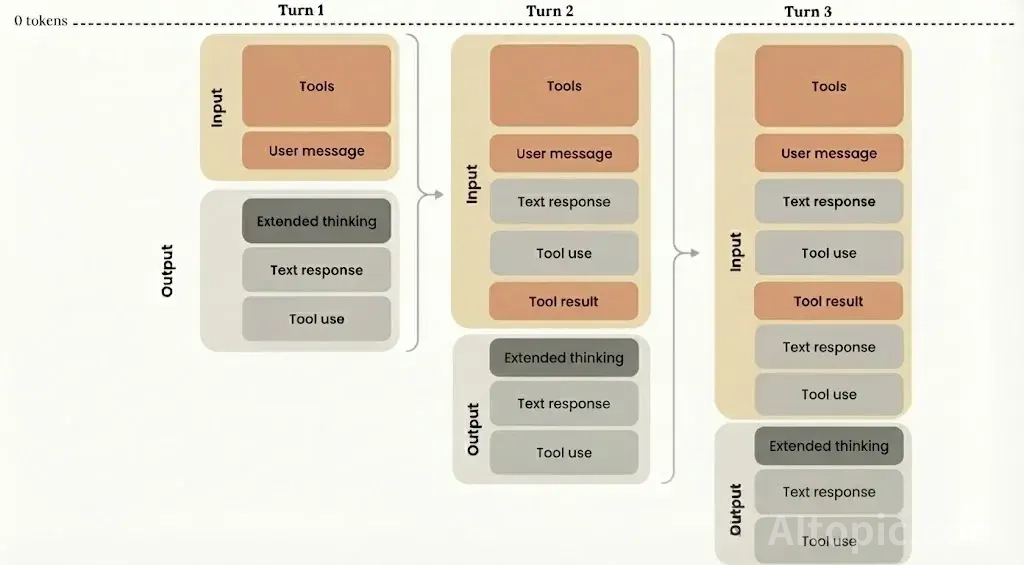

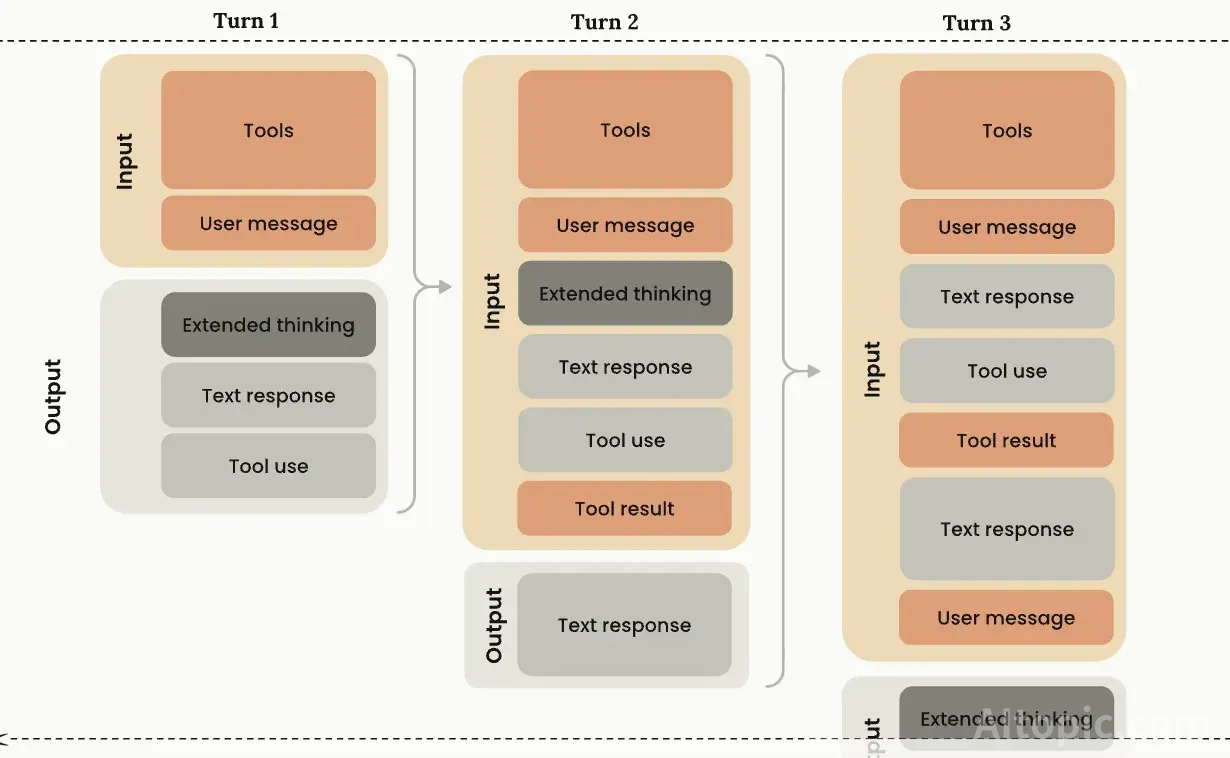

When we shift from chat to Agent mode, the interaction pattern changes dramatically:

User → Model calls tool → Tool returns result → Model calls next tool → … → task complete

This loop can span dozens of tool invocations for complex tasks (e.g., booking a flight: search → filter → view details → book → pay).

But here’s the catch: if the model discards its reasoning after each tool call, it has no memory of why it chose a particular tool or what the overall plan was. On every new step, it must re-infer the entire strategy from scratch, which leads to drift, inconsistency, and errors that compound over time.
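The loop above can be sketched in a few lines. This is a toy, assuming a scripted stand-in for the model (scripted_model, run_agent, and the search_flights/book tools are hypothetical) so that it runs without any LLM. With keep_thinking=False, the model sees tool calls and results at every step but never the reasons behind them.

```python
from typing import Callable


def run_agent(model: Callable, tools: dict, user_msg: str,
              keep_thinking: bool = False, max_steps: int = 10):
    """Drive a tool-calling loop. With keep_thinking=False, each step's reasoning
    is thrown away and only tool calls and results stay in the context."""
    context = [{"role": "user", "content": user_msg}]
    for _ in range(max_steps):
        step = model(context)  # dict with "thinking" plus either "final" or "tool"/"args"
        if "final" in step:
            return step["final"], context
        if keep_thinking:
            context.append({"role": "assistant", "type": "thinking", "content": step["thinking"]})
        context.append({"role": "assistant", "type": "tool_call",
                        "tool": step["tool"], "args": step["args"]})
        result = tools[step["tool"]](**step["args"])
        context.append({"role": "tool", "tool": step["tool"], "content": result})
    raise RuntimeError("step budget exhausted before the task finished")


def scripted_model(script):
    """Hypothetical stand-in for an LLM: replays fixed steps and records what it was shown."""
    seen = []
    steps = iter(script)

    def model(context):
        seen.append([dict(m) for m in context])  # snapshot of the context at this step
        return next(steps)

    return model, seen


SCRIPT = [
    {"thinking": "Search flights first, then book the cheapest.",
     "tool": "search_flights", "args": {"dest": "Tokyo"}},
    {"thinking": "JL5 is the cheapest option, so book it.",
     "tool": "book", "args": {"flight": "JL5"}},
    {"thinking": "Booking confirmed.", "final": "Booked JL5 to Tokyo."},
]
TOOLS = {
    "search_flights": lambda dest: [{"flight": "JL5", "price": 820},
                                    {"flight": "NH9", "price": 910}],
    "book": lambda flight: f"confirmed {flight}",
}

model, seen = scripted_model(SCRIPT)
answer, ctx = run_agent(model, TOOLS, "Book a flight to Tokyo.")
```

At the second step, the model is shown the search results but not the plan (“book the cheapest”) that motivated the search. Passing keep_thinking=True keeps each thought next to the call it produced.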

The Solution: Interleaved Thinking

To fix this, Anthropic introduced Interleaved Thinking with Claude Sonnet 4 and Opus 4. The idea is simple but powerful:

Preserve the model’s reasoning alongside each tool call and feed it back into the context for future steps.

Now the Agent’s context looks like this:

User: Book a flight to Tokyo.

Model (thinking): I need to search available flights first.

Model (tool call): {"tool": "search_flights", "params": {...}}

Tool result: [list of flights]

Model (thinking): Among these, I should filter by price and duration...

Model (tool call): {"tool": "filter_flights", ...}

...

This creates a continuous, coherent chain of reasoning that spans the entire task, dramatically improving planning stability and execution accuracy.
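For a concrete wire format, here is a minimal sketch of the same exchange using the content-block shapes of Anthropic’s Messages API. It uses plain dicts rather than SDK calls. The thinking, tool_use, and tool_result block types come from Anthropic’s documentation. The helper append_tool_round, the fake_search backend, the tool id, and the signature value are placeholders invented for illustration. Anthropic’s tool-use guidance asks clients to send the thinking block back unmodified in the assistant turn, alongside the tool result.

```python
import json


def append_tool_round(messages, assistant_blocks, run_tool):
    """Record one tool-use round, keeping the thinking block in the history."""
    # Echo the assistant turn back unchanged: thinking and tool_use blocks stay together.
    messages.append({"role": "assistant", "content": assistant_blocks})
    # Answer each tool_use block with a tool_result that points at its id.
    results = [
        {"type": "tool_result", "tool_use_id": block["id"],
         "content": run_tool(block["name"], block["input"])}
        for block in assistant_blocks
        if block["type"] == "tool_use"
    ]
    messages.append({"role": "user", "content": results})
    return messages


def fake_search(name, args):
    # Hypothetical tool backend returning a JSON string.
    return json.dumps([{"flight": "JL5", "price": 820}])


messages = [{"role": "user", "content": "Book a flight to Tokyo."}]
assistant_blocks = [
    {"type": "thinking", "thinking": "I need to search available flights first.",
     "signature": "placeholder-signature"},  # real signatures are opaque strings from the API
    {"type": "tool_use", "id": "toolu_demo_1", "name": "search_flights",
     "input": {"dest": "Tokyo"}},
]
append_tool_round(messages, assistant_blocks, fake_search)
```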

Other vendors use different names (Thinking-in-Tools, Thinking in Tool-Use, and so on), but the underlying mechanism is essentially the same.

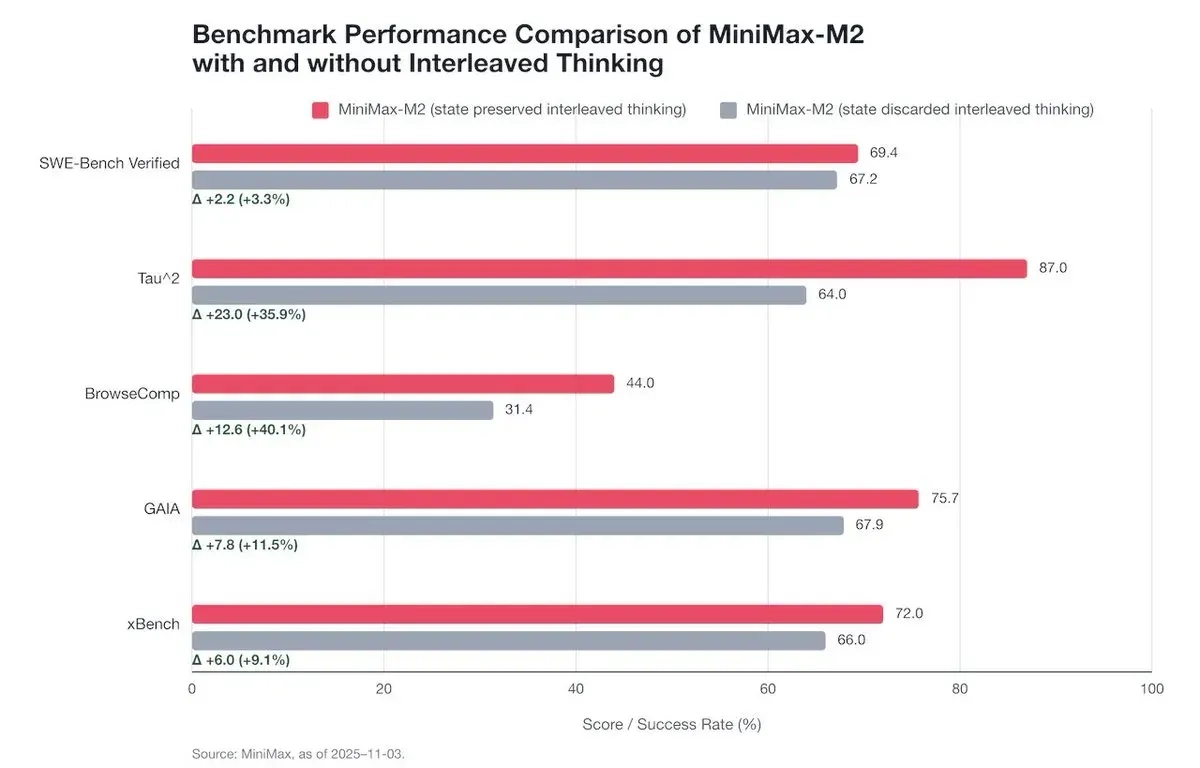

Does It Really Matter? Yes, Especially for Complex Tasks

MiniMax shared benchmark results showing significant performance gains on real-world Agent tasks such as flight booking and e-commerce workflows, which are scenarios that require many sequential, interdependent steps. When the model can “remember” its own logic at each stage, it stays aligned with the original plan and avoids erratic tool choices.

Can’t We Just Fake It in Engineering?

Technically, yes: you could manually wrap thinking content in XML tags or inject it as fake user messages. But this is suboptimal:

The model treats it as user input, not its own reasoning.

It wasn’t trained on such synthetic formats.

Performance relies purely on generalization, not purpose-built understanding.
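For illustration only, here is a sketch of that workaround. The tag names previous_thinking and tool_result are arbitrary choices. No model was trained on them, which is exactly the problem. The role/content message list is a generic convention, not a specific vendor API.

```python
import json


def inject_thinking_as_user_text(messages, thinking, tool_call, tool_result):
    """Workaround for models without native interleaved thinking:
    smuggle the previous reasoning back in as tagged user text."""
    messages.append({"role": "assistant", "content": json.dumps(tool_call)})
    messages.append({
        "role": "user",
        # The model reads this as something the *user* wrote, not as its own thought.
        "content": (f"<previous_thinking>{thinking}</previous_thinking>\n"
                    f"<tool_result>{tool_result}</tool_result>"),
    })
    return messages


msgs = [{"role": "user", "content": "Book a flight to Tokyo."}]
inject_thinking_as_user_text(
    msgs,
    thinking="I need to search available flights first.",
    tool_call={"tool": "search_flights", "params": {"dest": "Tokyo"}},
    tool_result="[JL5 $820, NH9 $910]",
)
```

The reasoning does reach the model, but it arrives under the user role, so the model has to guess that the text describes its own earlier plan.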

In contrast, natively supported interleaved thinking means:

The model is trained on massive datasets of full reasoning trajectories.

It learns to expect and leverage its past thoughts.

Output stability and planning coherence improve systematically.

This is why native support is a key differentiator in modern Agent-optimized models.

Extra Layer: “Signatures” and Encryption

Some models go further by adding integrity checks or obfuscation to the thinking content:

1. Signed Thinking (Claude, Gemini)

The reasoning is cryptographically signed.

On the next turn, the provider’s API verifies the signature before the prior thought is accepted back into the model’s context.

Why? To prevent tampering. If an external system alters the thinking (even slightly), the model’s internal logic breaks, which can cause failures or open security holes.

More importantly: during training, the model assumes its thinking is authentic self-output, not arbitrary user input. Allowing edits blurs this boundary.
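The real signing schemes are opaque to API users. The sketch below therefore only illustrates the tamper-detection idea, using a standard HMAC-SHA256 from Python’s standard library. PROVIDER_KEY, sign_thinking, and verify_thinking are hypothetical names, not part of any vendor’s API.

```python
import hashlib
import hmac

# Hypothetical provider-side secret; clients never see it.
PROVIDER_KEY = b"demo-key-held-only-by-the-provider"


def sign_thinking(thinking: str) -> str:
    """Provider side: issue a signature when the thinking block is generated."""
    return hmac.new(PROVIDER_KEY, thinking.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_thinking(thinking: str, signature: str) -> bool:
    """Provider side: on the next request, accept the block only if it is unchanged."""
    return hmac.compare_digest(sign_thinking(thinking), signature)


original = "Search flights first, then filter by price."
signature = sign_thinking(original)
edited = original.replace("price", "duration")  # an external system "helpfully" edits the plan

print(verify_thinking(original, signature))  # True
print(verify_thinking(edited, signature))    # False
```

Changing a single word invalidates the signature. Because the key never leaves the provider, a client cannot re-sign an edited thought.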

2. Encrypted/Redacted Thinking

Claude sometimes returns thinking as a redacted_thinking block whose content is an encrypted blob. Gemini uses thought_signature, an opaque, non-human-readable token.

Benefits:

Prevents leakage of sensitive reasoning (e.g., for safety or IP protection).

Enables more compact, model-friendly internal representations.

Makes model distillation harder (similar to why OpenAI o1 hides its full CoT).
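From the client’s side, signed or encrypted thinking changes one rule: you may display whatever is readable, but you must send every block back exactly as received. Here is a minimal sketch that assumes Anthropic-style content blocks, where a redacted_thinking block carries its encrypted payload in a data field. The prepare_turn helper is hypothetical, and the ciphertext is a placeholder.

```python
def prepare_turn(content_blocks):
    """Split an assistant turn into what a UI can show and what must be sent back.

    Block shapes follow Anthropic-style thinking / redacted_thinking / text blocks;
    the function itself is a hypothetical client helper.
    """
    visible = []
    for block in content_blocks:
        if block["type"] == "thinking":
            visible.append(block["thinking"])
        elif block["type"] == "redacted_thinking":
            # The "data" field is encrypted; the client cannot read it, only return it.
            visible.append("[reasoning hidden by provider]")
        elif block["type"] == "text":
            visible.append(block["text"])
    # History keeps every block exactly as received, opaque payloads included.
    history_entry = {"role": "assistant", "content": list(content_blocks)}
    return visible, history_entry


turn = [
    {"type": "redacted_thinking", "data": "opaque-ciphertext-placeholder"},
    {"type": "text", "text": "I found two flights to Tokyo."},
]
visible, entry = prepare_turn(turn)
```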

Note: Most Chinese models (as of early 2026) don’t yet implement signing or encryption.

A New Challenge: Debugging Becomes Harder

This architecture introduces a trade-off:

In older Agent systems, if the model mistakenly called Tool B instead of Tool A, developers could manually override the tool call without side effects.

But with interleaved thinking, you can’t just swap the tool call, because the reasoning that led to it is now part of the immutable context. Forcing a different action breaks the logical chain and confuses the model in subsequent steps.

Future direction? Model providers may need to offer official “correction hooks” (e.g., a way to say “this tool choice was wrong; please re-reason from this point”) that preserve chain integrity.
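Here is one way such a hook might work, sketched as a hypothetical client-side helper. No provider offers this exact function. Instead of swapping the tool call, it cuts the history at the thinking step that led to the bad call and appends a correction. The model then never sees a thought that contradicts the next action. rewind_and_correct, the context format, and the cancel_trip tool are all made up for illustration.

```python
def rewind_and_correct(context, bad_step_index, note):
    """Hypothetical 'correction hook': instead of editing a tool call in place,
    cut the context at the reasoning that produced it and ask for a re-plan."""
    if not 0 < bad_step_index < len(context):
        raise IndexError("bad_step_index must point inside the agent's history")
    kept = context[:bad_step_index]  # prefix whose thinking and actions still agree
    kept.append({"role": "user",
                 "content": f"Correction: {note} Please re-reason from this point."})
    return kept


context = [
    {"role": "user", "content": "Book a flight to Tokyo."},
    {"role": "assistant", "type": "thinking", "content": "Search flights first."},
    {"role": "assistant", "type": "tool_call", "tool": "search_flights", "args": {"dest": "Tokyo"}},
    {"role": "tool", "tool": "search_flights", "content": ["JL5 $820", "NH9 $910"]},
    {"role": "assistant", "type": "thinking", "content": "Cancel the trip instead."},  # faulty reasoning
    {"role": "assistant", "type": "tool_call", "tool": "cancel_trip", "args": {}},
]
fixed = rewind_and_correct(context, 4, "cancel_trip was the wrong tool; the user wants a booking.")
```

The key design choice is cutting at the thinking entry rather than at the tool call. The faulty reasoning and the action it produced are removed together, and every remaining block is untouched, so any signatures on it stay valid.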

Conclusion

Interleaved Thinking (or whatever you call it) is not just a buzzword; it’s a foundational upgrade for Agent intelligence. By preserving and validating the model’s own reasoning across tool-call cycles, it enables robust, long-horizon planning that chat-style context handling simply can’t support.

As of early 2026, Claude Sonnet 4 and Gemini 3 enforce this pattern by default in Agent mode, and the reported performance gains, especially in multi-step real-world tasks, are substantial.

The era of “thinking once and done” is over. For Agents, thinking continuously, and remembering how you thought, is the new baseline.
